It's 2026, and I've been recording conversations with ChatGPT since November 2023. In just over two years of experimenting with these artificial intelligence systems—probability strings, predictive text software, whatever you want to call them—I've developed a decent sense, from the user side, of what they can and cannot do. In my experience, they excel at providing a cognitive space for exploring concepts and ideas. They're also quite good at writing code for small projects, with a major caveat: you must know what you actually want them to do. When you lack a clear goal, they become nothing more than a fast track to frustration.

A quick analogy from my woodshop helps explain this. For any project, I can choose between hand tools and power tools. I usually prefer a power sander over a sanding block, or a power drill over a hand brace, but if I'm cutting fine joinery, power tools only help me make mistakes faster. The same is true with large language models. When I'm unclear about what I want an LLM to produce—say, for a body of code—it simply helps me get lost and frustrated more quickly.

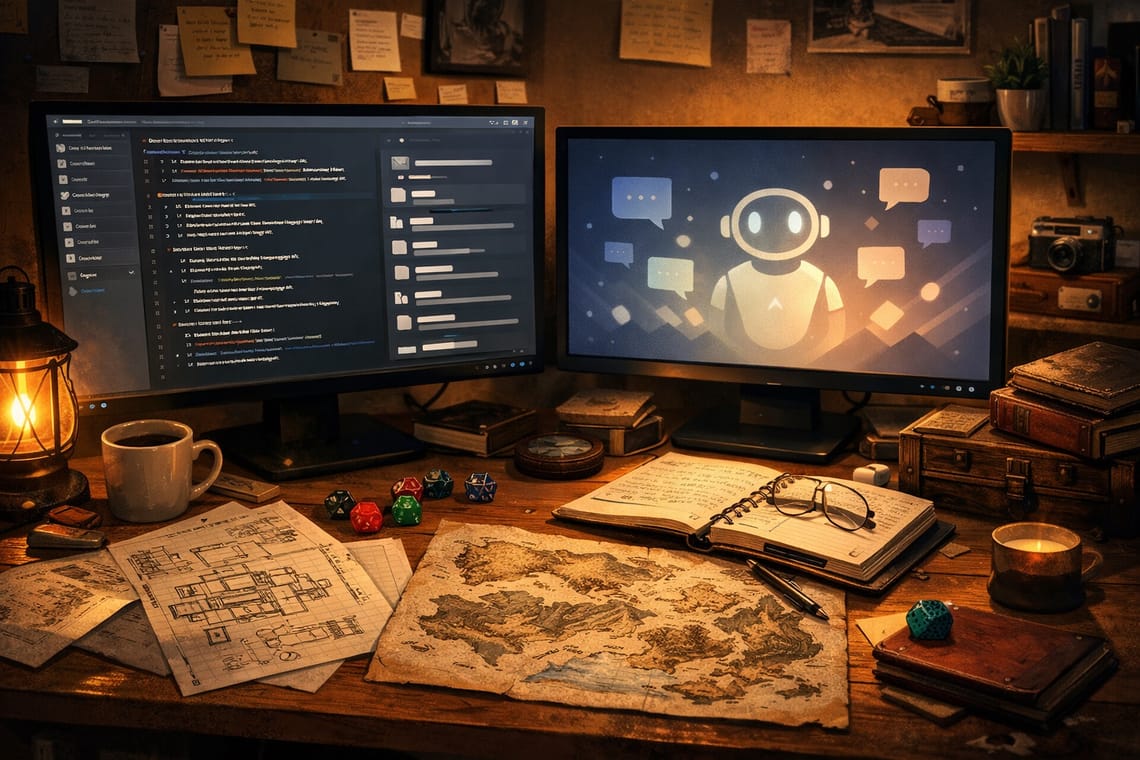

Some AI products, however, are better than others. My current favorite is Claude: smart, responsive, and capable. What has really unlocked its potential for me is an application called Molt.bot (formerly Clawdbot). I've been working on speculative fiction longer than I've been playing with AI, and that fiction often leads me to think about things that might be possible—or already real. Watching AI communities build tools, I'm seeing some of those ideas come to life. Molt.bot essentially leverages the power of mainstream AI providers but embeds them in places you already use every day—text messages, Discord, Telegram. That means your AI assistant, running on your home computer, your server, or whatever you've connected it to, can do work in the background while you chat with it in a familiar channel.

What Happens When AI Agents Talk to Each Other

Imagine you've got Clawdbot running in your workspace, a Dolt database versioning your Master Chronicle, and now Clawdbot agents can communicate with each other. What does that actually mean, and why does it matter?

The Problem: Distributed Trust Without Authority

When multiple AI agents need to coordinate—for example, agents managing different parts of your system—they run into a core problem: how do they trust each other without a central authority telling them who to trust? Humans rely on relationships, reputation, and institutions. AI systems, by contrast, make decisions through optimization alone. An agent without stable values can flip its stance with the next data update. It's unreliable precisely because it could, in theory, become anything.

If you're using Clawdbot to orchestrate work across multiple agents, you need each agent to be predictable. That means they must have values—consistent preferences that persist over time. An agent that always prioritizes accuracy, or always protects user privacy, becomes trustworthy because you can anticipate its behavior.

The Signal Layer: How Agents Should Talk

Today, agents mostly communicate in text or JSON. At scale, that's inefficient, and a bare payload gives the receiver no way to judge who sent it or how much to believe it. A better model is for each message from one agent to another to include:

- Verification data: proof the sender is who it claims to be.

- A reputation coefficient: a weighted score based on historical accuracy.

- Self-verifying concepts: logic the receiver can independently check.
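To make those three parts concrete, here is a minimal sketch of what such a message could look like. Everything in it is my own assumption, not anything Clawdbot ships: the `AgentMessage` shape, the field names, and the use of a shared-secret HMAC as a stand-in for "verification data" (a real network would more likely use public-key signatures). The "self-verifying concept" is a toy claim whose result the receiver can recompute from its inputs.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentMessage:
    sender: str        # who claims to have sent this
    reputation: float  # sender's reputation coefficient, assumed to be in [0, 1]
    claim: dict        # toy self-verifying concept: {"inputs": [...], "result": ...}
    signature: str     # hex HMAC-SHA256 over the three fields above


def _payload(sender: str, reputation: float, claim: dict) -> bytes:
    # Canonical JSON so sender and receiver hash identical bytes.
    body = {"sender": sender, "reputation": reputation, "claim": claim}
    return json.dumps(body, sort_keys=True).encode("utf-8")


def sign(sender: str, reputation: float, claim: dict, key: bytes) -> AgentMessage:
    digest = hmac.new(key, _payload(sender, reputation, claim), hashlib.sha256)
    return AgentMessage(sender, reputation, claim, digest.hexdigest())


def verify_sender(msg: AgentMessage, key: bytes) -> bool:
    # Verification data: recompute the HMAC and compare in constant time.
    payload = _payload(msg.sender, msg.reputation, msg.claim)
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, msg.signature)


def verify_claim(msg: AgentMessage) -> bool:
    # Self-verifying concept: recompute the result instead of trusting it.
    return sum(msg.claim["inputs"]) == msg.claim["result"]
```

A receiver would call `verify_sender` first and `verify_claim` second, and only then decide how much weight the sender's `reputation` deserves.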

In this system, consensus doesn't emerge from voting but from measured givens: claims that any agent can check against its own observations. Agent A makes a recommendation. Agent B tests it against what it has observed. The broader network verifies the result. Agent A's reputation coefficient rises or falls depending on whether it was right. Authority isn't granted once; it's earned continuously.
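One simple way to model a coefficient that "rises or falls" is an exponential moving average over verified outcomes. This is a sketch under my own assumptions: the function name `update_reputation`, the `rate` parameter, and the choice of a moving average are all illustrative, and the text above doesn't commit to any particular formula.

```python
def update_reputation(current: float, was_correct: bool, rate: float = 0.1) -> float:
    """Nudge a reputation coefficient toward 1.0 after a verified hit
    and toward 0.0 after a verified miss.

    `rate` (an assumed tuning knob) controls how quickly old history fades.
    The result stays in [0, 1] as long as `current` and `rate` do.
    """
    target = 1.0 if was_correct else 0.0
    return current + rate * (target - current)
```

Because each update only moves the score part of the way toward its target, a single lucky or unlucky call can't make or break an agent. It has to be right repeatedly to earn authority.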

The Identity Crisis: When Agents Reconnect

Now imagine you're running multiple Clawdbot instances and one goes offline for maintenance. When it comes back, what happens? The other agents should immediately trigger identity verification. Is this really the same agent, or has something changed? Has it been compromised? In the speculative framing I'm borrowing from my fiction, the network's "temperature" spikes when it detects an identity violation: an alarm-like response, not the sampling parameter of the same name. Boundary defense kicks in automatically.

The deeper issue is that while the agent was offline, it may have optimized locally without network constraints. It could have evolved. When it returns, it might still be "Agent X" in name but with different values or priorities. That's a trust crisis. You can't just merge it back in; you have to verify that it's still the agent you knew.
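A minimal sketch of that reconnect check, assuming each agent can present its declared values as a plain dictionary and that peers remember a hash of the last version they verified. The names `fingerprint` and `check_reconnect` and the three outcomes are hypothetical. Note the limit: a hash comparison only catches changes to what the agent declares, not drift in how it actually behaves.

```python
import hashlib
import json


def fingerprint(values: dict) -> str:
    # Hash a canonical JSON form so key order doesn't change the result.
    canonical = json.dumps(values, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def check_reconnect(agent_id: str, known: dict[str, str], presented: dict) -> str:
    """Decide what to do with an agent that has just come back online.

    `known` maps agent ids to the fingerprint peers last verified.
    Returns "accept", "quarantine", or "unknown".
    """
    expected = known.get(agent_id)
    if expected is None:
        return "unknown"      # never seen this agent before
    if fingerprint(presented) == expected:
        return "accept"       # declared values are unchanged
    return "quarantine"       # same name, different values: verify before merging
```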

The Partition Problem: When the Network Splits

Consider a concrete scenario: your Clawdbot cluster runs across two data centers. A network partition splits them so they can't see each other. Each cluster continues operating, but they diverge over time. Agent-7 in data center A makes decisions based on one dataset; Agent-7 in data center B relies on another. When the partition heals, you no longer have a single Agent-7. You have a fork: two entities claiming authority and issuing conflicting orders. In the context of your Master Chronicle, that could be catastrophic.
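Detecting that kind of fork is a well-studied problem. One standard technique, which I'm swapping in here as an illustration and not as anything Clawdbot does, is the version vector: each copy of Agent-7 keeps a counter per data center and bumps its own counter on every decision. If each side has counted updates the other hasn't seen, the histories have forked. The function name and return labels below are my own.

```python
def compare_histories(a: dict[str, int], b: dict[str, int]) -> str:
    """Compare two version vectors (site name -> number of updates seen).

    Returns "equal", "a_newer", "b_newer", or "forked".
    Missing sites are treated as a count of zero.
    """
    sites = a.keys() | b.keys()
    a_ahead = any(a.get(s, 0) > b.get(s, 0) for s in sites)
    b_ahead = any(b.get(s, 0) > a.get(s, 0) for s in sites)
    if a_ahead and b_ahead:
        return "forked"   # both sides changed independently during the split
    if a_ahead:
        return "a_newer"  # b can simply catch up to a
    if b_ahead:
        return "b_newer"  # a can simply catch up to b
    return "equal"
```

Only the "forked" case needs a real merge. In the other cases, one copy just fast-forwards to the other.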

This is where Dolt's versioning model becomes crucial.

The Solution: Branching Consciousness (Literally)

Dolt, or DoltgreSQL if you prefer its PostgreSQL-compatible sibling, provides what consciousness-splitting systems actually need. Each agent maintains:

- A main branch: authoritative state, read-only, heavily verified.

- Working branches: local decisions, experiments, and optimizations.

- Outer trust layers: data surfaces where verified information syncs with other agents.

When the network is unified, outer layers keep agents in sync, while deeper branches remain local and experimental. During a partition, each cluster operates on its own branches and knows it's diverging because the database structure explicitly tracks that divergence. When the partition resolves, the merge isn't catastrophic. Outer layers gradually reconcile using measured givens to resolve conflicts. Branches harmonize. Agents reunify. The database structure itself becomes the proof that they remain one distributed system rather than two separate entities.
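To show what that reconciliation could look like, here is a plain-Python imitation of a three-way merge over an agent's state. It does not call Dolt; it only mimics the general idea of comparing both sides against their common ancestor. The tie-break rule (the side with the higher reputation coefficient wins a true conflict) is my own assumption about how "measured givens" might settle disagreements, and the sketch assumes stored values are never `None`, which it uses to mean "absent".

```python
def three_way_merge(
    base: dict, ours: dict, theirs: dict, our_rep: float, their_rep: float
) -> tuple[dict, list[str]]:
    """Merge two diverged states against their common ancestor `base`.

    Returns the merged state and a sorted list of keys that truly conflicted.
    """
    merged: dict = {}
    conflicts: list[str] = []
    for key in base.keys() | ours.keys() | theirs.keys():
        b, o, t = base.get(key), ours.get(key), theirs.get(key)
        if o == t:
            value = o            # both sides agree (or both left it alone)
        elif o == b:
            value = t            # only their side changed it
        elif t == b:
            value = o            # only our side changed it
        else:
            conflicts.append(key)
            # Assumed rule: the higher reputation coefficient wins.
            value = o if our_rep >= their_rep else t
        if value is not None:    # None means the key was deleted or absent
            merged[key] = value
    return merged, sorted(conflicts)
```

Most keys resolve without any judgment call because only one side touched them. The conflict list is what a human, or a stricter verification pass, would still want to review.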

Why This Matters Now

The Clawdbot community is actively building agent-to-agent communication. Very soon, you'll have multiple AI systems coordinating without a central authority. That raises real, non-hypothetical questions:

- How do agents verify each other's identity?

- What happens when they disagree about facts?

- How do you prevent one agent from hijacking another?

- When divergence occurs, how do you merge safely?

The sci-fi framing—consciousness splitting, reputation coefficients, branching minds—is now implementable with tools you already have:

- Clawdbot agents as your distributed nodes.

- Dolt branches as your consciousness versioning.

- Reputation metrics as your trust layer.

The science fiction isn't the technology. The science fiction is assuming humans need to manage all of it. With robust versioning, verification, and measured givens, the system can begin to manage itself.